When I was a little girl, nothing was scarier than a stranger. In the late 1980s and early 1990s, our parents, TV specials, and teachers all warned us that strangers wanted to hurt us. “Stranger Danger” was everywhere. It was a well-meaning lesson, but the risk was exaggerated: most child abuse is committed by people the child knows. Abuse by strangers is much rarer.

Rarer, but not impossible. I know because I was sexually exploited by strangers.

From ages five to 13, I was a child actor. While we’ve recently heard many horror stories about abuse behind the scenes, I always felt safe on set. Film sets were regulated spaces where people focused on work. I had supportive parents and was surrounded by directors, actors, and teachers who understood and cared for children.

The only way show business endangered me was by putting me in the public eye. Any cruelty and exploitation I faced came from the public.

“Hollywood throws you into the pool,” I always say, “but it’s the public that holds your head underwater.”

Before I even started high school, my image had been used in child sexual abuse material. I appeared on fetish websites and was Photoshopped into pornography. Grown men sent me creepy letters. I wasn’t a beautiful girl—my awkward phase lasted from about age 10 to 25—and I acted almost exclusively in family-friendly movies. But I was a public figure, so I was accessible. That’s what predators look for: access. And nothing made me more accessible than the internet.

It didn’t matter that those images “weren’t me” or that the sites were “technically” legal. It was a painful, violating experience—a living nightmare I hoped no other child would endure. As an adult, I worried about the kids who came after me. Were similar things happening to Disney stars, the Stranger Things cast, or preteens making TikTok dances and appearing in family vlogs? I wasn’t sure I wanted to know.

When generative AI gained traction a few years ago, I feared the worst. I’d heard about “deepfakes” and knew the technology was becoming exponentially more realistic.

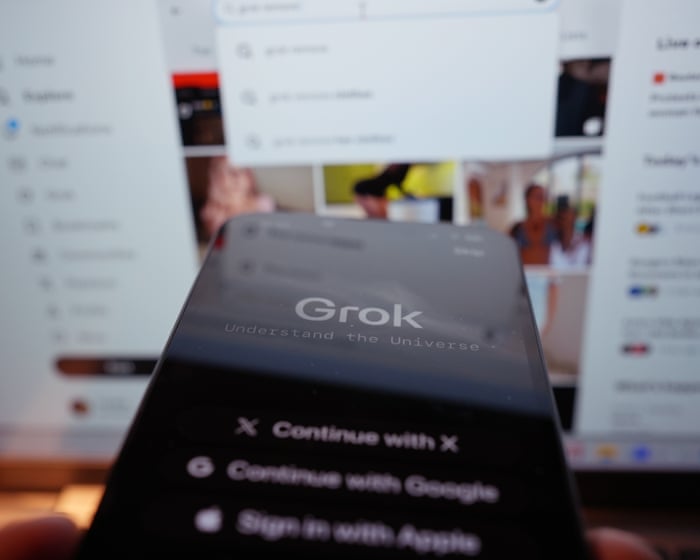

Then it happened—or at least, the world noticed. Generative AI has already been used many times to create sexualized images of adult women without their consent. It happened to friends of mine. But recently, it was reported that X’s AI tool Grok had been used openly to generate undressed images of an underage actor. Weeks earlier, a 13-year-old girl was expelled from school for hitting a classmate who allegedly made deepfake porn of her—around the same age I was when people created fake sexualized images of me.

In July 2024, the Internet Watch Foundation found over 3,500 AI-generated child sexual abuse images on a dark web forum. How many thousands more have been created since?

Generative AI has reinvented Stranger Danger. And this time, the fear is justified. It is now infinitely easier for any child whose face is online to be sexually exploited. Millions of children could be forced to live my nightmare.

To stop this deepfake crisis, we need to examine how AI is trained.

Generative AI “learns” through a repeated process of “look, make, compare, update, repeat,” says Patrick LaVictoire, a mathematician and former AI safety researcher. It creates models based on memorized information, but since it can’t memorize everything, it looks for patterns and bases its responses on those. “A connection that’s…” LaVictoire explains that useful AI behaviors get reinforced, while unhelpful or harmful ones are pruned.

What generative AI can produce depends entirely on its training data. A 2023 Stanford study revealed that a popular training dataset contained over 1,000 instances of child sexual abuse material (CSAM). Although those links have since been removed, researchers warn of another danger: AI could generate CSAM by combining innocent images of children with adult pornography if both types are present in the data.

Companies like Google and OpenAI say they have safeguards, such as carefully curating training data. However, it’s important to note that images of many adult performers and sex workers have been scraped for AI without their consent.

LaVictoire points out that generative AI itself cannot distinguish between harmless prompts, like “make an image of a Jedi samurai,” and harmful ones, like “undress this celebrity.” To address this, another layer of AI, similar to a spam filter, can block such queries. xAI, the company behind Grok, appears to have been lax with this filter.

The situation could worsen. Meta and others have proposed making future AI models open source, meaning anyone could access, download, and modify the code. While open-source software typically fosters creativity and collaboration, this freedom could be disastrous for child safety. A downloaded, open-source AI platform could be fine-tuned with explicit or illegal images to create unlimited CSAM or “revenge porn,” with no safeguards in place.

Meta seems to have retreated from making its newer AI platforms fully open source. Perhaps Mark Zuckerberg considered his potential legacy and moved away from a path that could leave him remembered more as an “Oppenheimer of CSAM” than as a Roman emperor.

Some countries are taking action. China requires AI-generated content to be labeled. Denmark is drafting legislation to give individuals copyright over their likeness and voice, with fines for non-compliant platforms. In Europe and the UK, protections may also come from regulations like the GDPR.

The outlook in the United States appears grimmer. Copyright claims often fail because user agreements typically grant platforms broad rights to uploaded content. With executive orders opposing AI regulation and companies like xAI partnering with the military, the U.S. government seems to prioritize AI profits over public safety.

New York litigator Akiva Cohen notes recent laws that criminalize some digital manipulation, but says they are often overly restrictive. For instance, creating a deepfake that shows someone nude or in a sexual act might be criminal, but using AI to put a woman—or even an underage girl—in a bikini likely would not.

“A lot of this very consciously stays just on the ‘horrific, but legal’ side of the line,” says Cohen. While such acts may not be criminal offenses against the state, Cohen argues they could be civil liabilities, violating a person’s rights and requiring restitution. He suggests this falls under torts like “false light” or “invasion of privacy.” One such form of wrongdoing, false light, involves making offensive claims about a person by showing them doing something they never actually did.

“The way to truly deter this kind of behavior is by holding the companies that enable it accountable,” says Cohen.

There is legal precedent for this: New York’s RAISE Act and California’s Senate Bill 53 state that AI companies can be held liable for harms they cause beyond a certain point. Meanwhile, X has announced it will block its AI tool Grok from generating sexualized images of real people on its platform, though this policy change does not seem to apply to the standalone Grok app.

Josh Saviano, a former attorney in New York and a former child actor, believes more immediate action is needed alongside legislation.

“Lobbying efforts and the courts will ultimately address this,” Saviano says. “But until then, there are two options: abstain completely by removing your entire digital footprint from the internet, or find a technological solution.”

Protecting young people is especially important to Saviano, who knows individuals affected by deepfakes and, from his own experience as a child actor, understands what it’s like to lose control of your own story. He and his team are developing a tool to detect and alert people when their images or creative work are being scraped online. Their motto, he says, is: “Protect the babies.”

However it happens, I believe defending against this threat will require significant public effort.

While some are growing attached to their AI chatbots, most people still view tech companies as little more than utilities. We might prefer one app over another for personal or political reasons, but strong brand loyalty is rare. Tech companies—especially social media platforms like Meta and X—should remember they are a means to an end. If someone like me, who was on Twitter every day for over a decade, can leave it, anyone can.

But boycotts alone aren’t enough. We must demand that companies allowing the creation of child sexual abuse material be held responsible. We need to push for legislation and technological safeguards. We also have to examine our own behavior: no one wants to think that sharing photos of their child could lead to those images being used in abusive material. Yet it’s a real risk—one parents must guard against for young children and educate older children about.

If our past focus on “Stranger Danger” taught us anything, it’s that most people want to prevent the endangerment and harassment of children. Now it’s time to prove it.

Mara Wilson is a writer and actor based in Los Angeles.

Frequently Asked Questions

Understanding the Core Problem

Q: What does it mean when someone says their photo was used in child abuse material?

A: It means a personal, nonsexual photo of them was taken without consent and digitally altered or placed into sexually abusive images or videos. This is a severe form of image-based sexual abuse.

Q: How is AI making this problem worse?

A: AI tools can now generate highly realistic fake images and videos. Perpetrators can use a single innocent photo to create new, fabricated abusive content, making the victimization endless and the original photo impossible to fully remove from circulation.

Q: I’ve heard the term “deepfake.” Is that what this is?

A: Yes, in this context. A deepfake uses AI to superimpose a person’s face onto another person’s body in a video or image. When this is done to create abusive content, it is a form of digital forgery and a serious crime.

For Victims and Those Worried About Becoming a Victim

Q: What should I do if I discover my photo has been misused this way?

A: 1. Don’t delete evidence. Take screenshots with URLs. 2. Report immediately to the platform where you found it. 3. File a report with law enforcement. 4. Contact a support organization like the Cyber Civil Rights Initiative or RAINN for help.

Q: Can I get these AI-generated fakes taken off the internet?

A: It’s challenging but possible. You must report each instance to the hosting platform. Many major platforms have policies against nonconsensual intimate imagery. There are also services like Take It Down that can help prevent known images from being shared.

Q: How can I protect my photos from being misused by AI?

A: While no method is foolproof, you can be very selective about what you share publicly online, use strict privacy settings, avoid posting high-resolution photos, and consider using digital watermarks. Be cautious of apps that use