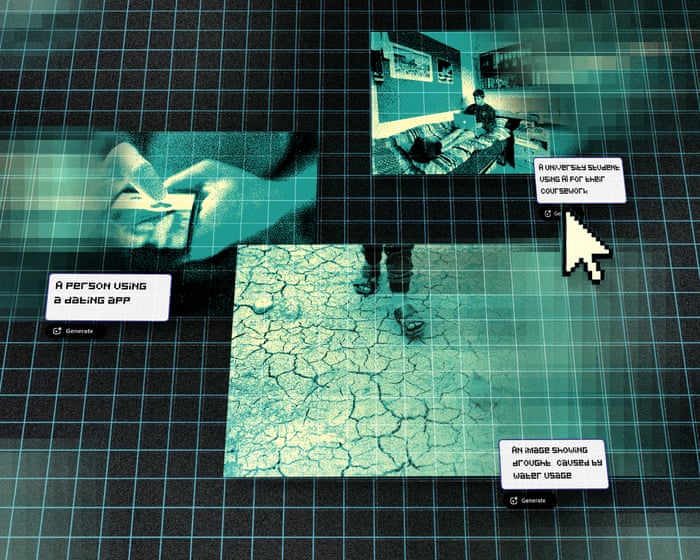

What’s Real and What’s Fake? Can We Even Tell?

Sumaiya Motara

Freelance journalist based in Preston, working in broadcasting and local democracy reporting

A family member recently showed me a Facebook video of Donald Trump accusing India of violating a ceasefire with Pakistan. If it hadn’t been so unlike him, I might have believed it too. After checking news sources, I realized the video was AI-generated. But when I explained this, my relative refused to accept it—because it looked real. Without my intervention, they would have shared it with dozens of people.

Another time, a TikTok video popped up on my feed showing male migrants arriving in the UK by boat. One man vlogged, “We survived this dangerous journey—now off to the five-star Marriott!” The account, migrantvlog, posted 22 clips in just a few days, showing them thanking Labour for “free” buffets, celebrating £2,000 e-bikes for Deliveroo deliveries, and burning the Union Jack. The video gained nearly 380,000 views in a month.

Even though the AI glitches weren’t obvious—no disappearing limbs or floating plates—the blurry backgrounds and unnatural movements gave it away. But did the thousands of viewers notice? Judging by the racist, anti-immigrant comments flooding the section, most didn’t.

This blurring of truth and fiction terrifies me. The Online Safety Act targets state-backed disinformation, but what about everyday people spreading fake videos without realizing? Last summer’s riots were fueled by AI-generated images, with only fact-checkers like Full Fact trying to set the record straight. I worry for those less media-savvy, who fall for these lies and add fuel to the fire.

AI can tell compelling stories—but who controls the narrative?

Rukanah Mogra

Leicester-based journalist working in sports media and digital communications with Harborough Town FC

The first time I used AI for work was to help with a match report. I was on deadline, exhausted, and my intro wasn’t working. I fed my notes into an AI tool, and to my surprise, it suggested a headline and opening that actually worked. It saved me time—a relief when minutes mattered.

But AI isn’t magic. It can tidy up clunky sentences and trim wordiness, but it can’t chase sources, capture atmosphere, or sense when a story needs to pivot. Those decisions still fall to me.

What makes AI useful is that it feels like a judgment-free editor. As a young freelancer, I don’t always have access to regular feedback. Sharing early drafts with a human editor can feel vulnerable, especially when you’re still finding your voice. ChatGPT doesn’t judge—it lets me experiment, refine awkward phrasing, and build confidence before hitting send.

Still, I’m cautious. Journalism already leans too hard on tools that promise speed. If AI starts shaping how stories are told—or worse, which stories get told—we risk losing the creativity, challenge, and friction that make reporting meaningful. For now, AI is just an assistant. The direction? That’s still up to us.

Author’s note: I wrote the initial draft myself, drawing on real experiences. Then I used ChatGPT to polish the flow, clarify phrasing, and refine the style, prompting it to rewrite in a natural, Guardian-esque tone. AI helped, but the ideas and voice remain mine.

Does AI Come at an Environmental Cost?

Frances Briggs

Manchester-based science website editor

AI is undeniably powerful—it’s a remarkable technological leap forward, and I’d be naive to think otherwise. But I have concerns. I worry about my job disappearing in five years, and I’m deeply troubled by AI’s environmental footprint.

Understanding AI’s true impact is challenging because major players guard their data closely. What’s clear, though, is that the situation isn’t good. A recent study, one of several with similar findings, reported some alarming figures. The research focused on just one example: OpenAI’s ChatGPT-4o model. Its annual energy use is estimated at roughly 450,000 MWh, equal to that of 35,000 homes, 325 universities, or 50 U.S. hospitals.

And that’s just the beginning. Cooling these supercomputers’ processors adds another layer of strain. Social media buzzes with shocking stats about AI’s data centers, and they’re not far from reality. Estimates suggest ChatGPT-4o’s cooling alone requires about 2,500 Olympic-sized swimming pools of water.

Smaller AI tools like Perplexity or Claude don’t seem as energy-intensive. Globally, AI still accounts for less than 1% of total energy use. But in places like Ireland, data centers consumed 22% of the country’s electricity last year—more than all urban households combined. With over 6,000 data centers in the U.S. alone, and AI adoption skyrocketing since 2018, these numbers could look very different in a year.

Despite the grim stats, I hold out hope. Researchers are already developing more efficient, cost-effective processors using nanomaterials and other innovations. Compared to early language models from seven years ago, today’s versions are far less wasteful. Energy-hungry data centers will improve—experts just need time to figure out how.

—

If AI Plays Matchmaker, Will I Know Who I’m Really Dating?

Saranka Maheswaran

London-based student and aspiring journalist

“Get out there, meet people, and date, date, date!” is the advice I hear most as a 20-something. After a few awkward encounters and plenty of post-date gossip sessions, a new fear crept in: What if they’re using AI to message me?

Overly polished responses or oddly perfect conversation starters first made me suspicious. I’m not anti-AI—resisting it entirely won’t stop its rise—but I worry about our ability to form real connections.

For a generation already insecure about how they communicate, AI is a tempting crutch. It might start with a simple request—“Make this message sound friendlier”—but it can spiral into dependence, eroding confidence in your own voice. A 2025 Match.com study found that 1 in 4 U.S. singles have used AI in dating.

Maybe I’m too cynical. But to anyone unsure about how they come across in messages: trust that if it’s meant to be, it’ll happen, without letting AI do all the talking.

Finding Balance in the Age of AI

Iman Khan

Final-year student at the University of Cambridge, specializing in social anthropology

The rise of AI in education has made me question the notion of impartial or neutral knowledge. In this new era, we must critically examine every piece of information we encounter—especially in universities, where AI increasingly supports teaching and learning. While we can’t separate AI from education, we must scrutinize the systems and narratives that shape its development and use.

My first experience with AI in education was asking ChatGPT for reading recommendations. I expected it to function like an advanced search engine, but I quickly realized its tendency to “hallucinate”—presenting false or misleading information as fact. At first, I saw this as a minor obstacle in an otherwise promising tool, assuming it would improve over time. However, it’s now clear that AI chatbots like ChatGPT and Gemini contribute to the spread of misinformation.

AI has made the relationship between humans and technology more uncertain. We need research into how AI affects the social sciences and how it integrates into our learning and daily lives. I want to explore how we adapt to AI not just as a tool but as an active participant in society.

—

AI as a Creative Partner in Architecture

Nimrah Tariq

London-based graduate specializing in architecture

In my early university years, we were discouraged from using AI for architecture essays and models, except for proofreading. But by my final year, AI became a key part of our design process—helping with rendering and refining our work.

Our studio tutor taught us how to craft detailed AI prompts for platforms like Visoid, turning our sketches into concept designs. This expanded my ideas and gave me more creative options. While AI was useful in the conceptual phase, inaccurate prompts led to poor results, so we learned to be more precise. I mainly used it for final touches, enhancing rendered images.

Initially, AI didn’t influence my design process much; I relied on existing buildings for inspiration. But later, it opened up new approaches, speeding up experimentation and pushing creative boundaries. Now, I see AI as a tool that enhances, rather than replaces, human creativity.

As I start my career, I’m excited to see how AI transforms architecture. Firms already prioritize AI skills in job applicants, and its impact on design is undeniable. Staying updated with technology has always been crucial in architecture—AI has only reinforced that.

Panel compiled by Sumaiya Motara and Saranka Maheswaran, interns on the Guardian’s positive action scheme.

### **FAQs: Generation Z, AI, and the Future**

#### **Basic Questions**

**1. What is Generation Z?**

Generation Z refers to people born between the mid-to-late 1990s and early 2010s. They grew up with the internet, smartphones, and social media.

**2. How is AI shaping Gen Z’s future?**

AI is changing education, jobs, and daily life—from personalized learning to automation in careers. Gen Z will likely work alongside AI in many fields.

**3. Will AI take away jobs from Gen Z?**

Some jobs will be automated, but AI will also create new roles. Adapting skills will be key.

**4. Is AI safe for Gen Z to use?**

Generally, yes—but privacy, misinformation, and over-reliance are concerns. Learning to use AI responsibly is important.

—

#### **Benefits of AI for Gen Z**

**5. How can AI help Gen Z in education?**

AI tutors, personalized learning apps, and instant research tools make studying more efficient and tailored to individual needs.

**6. Can AI improve mental health for Gen Z?**

Yes—AI chatbots offer support, but they shouldn’t replace human professionals for serious issues.

**7. Will AI make life easier for Gen Z?**

In many ways, yes! AI can automate boring tasks, improve healthcare, and help with creative projects.

—

#### **Risks and Challenges**

**8. What are the biggest dangers of AI for Gen Z?**

Job disruption, deepfake scams, addiction to AI tools, and biased algorithms are key risks.

**9. Can AI increase inequality for Gen Z?**

Possibly—if access to AI tools isn’t equal, some may fall behind in education or job opportunities.

**10. Is AI making Gen Z lazier?**

If overused, yes. Relying too much on AI for thinking or creativity could reduce critical skills. Balance is important.

—

#### **Advanced Questions**

**11. How can Gen Z prepare for an AI-driven job market?**

Learn tech skills, but also soft skills—